Dr. Marco V. Benavides Sánchez.

Artificial intelligence (AI) has reshaped many sectors, and healthcare is no exception. From early disease detection to personalized treatment plans, AI has the potential to transform patient care and outcomes. However, integrating AI into healthcare systems presents several challenges, particularly in balancing innovation with patient safety. This balance spans several areas: regulatory frameworks, liability, data privacy, ethics, patient safety frameworks, and clinical implementation, all shaped by the ongoing tension between innovation and safety. This article explores each of these areas in depth to show how healthcare systems can leverage AI while maintaining the highest standards of patient safety.

1. Regulatory Frameworks: Setting the Standards for Safe AI Use in Healthcare

Regulatory bodies play a crucial role in ensuring that AI is used safely in healthcare settings. In the UK, the Medicines and Healthcare products Regulatory Agency (MHRA) has developed a strategic approach that includes several guiding principles: safety, security, transparency, fairness, accountability, and governance. These principles form the backbone of regulatory frameworks that aim to balance AI innovation with patient safety.

The MHRA’s strategy underscores the importance of clear guidelines for developing and implementing AI technologies in medicine. For example, the strategy mandates rigorous testing and validation of AI systems before they are deployed in clinical settings. By setting these standards, the MHRA and similar regulatory bodies worldwide provide a roadmap for AI developers and healthcare providers to follow, ensuring that new technologies do not compromise patient safety.

Regulatory frameworks are not just about rules and restrictions; they also encourage innovation by providing a clear and predictable environment for AI development. Innovators are more likely to invest in AI technologies if they understand the regulatory landscape and know that their innovations will be tested fairly and transparently. In this way, regulatory bodies play a dual role: protecting patient safety while fostering innovation in healthcare.

2. Liability and Accountability: Navigating the Legal Landscape of AI in Medicine

As AI becomes more integrated into healthcare, questions of liability and accountability become increasingly complex. Traditionally, liability in healthcare has focused on medical malpractice, where healthcare providers are held accountable for errors or negligence. However, the use of AI introduces new challenges in determining who is responsible when an AI system makes an error.

Current liability frameworks are often ill-equipped to handle these new challenges. For instance, if an AI tool provides an incorrect diagnosis or recommends an inappropriate treatment, should the blame lie with the healthcare provider who used the tool, the developer who created the tool, or the institution that deployed it? To address these concerns, policymakers are considering various options, such as altering the standard of care, creating new insurance models, or developing special adjudication systems for AI-related cases.

One potential solution is to establish a balanced liability system that fairly distributes responsibility among all parties involved. This could involve creating new legal categories specifically for AI-related incidents or expanding existing malpractice laws to include AI systems. In any case, the goal should be to ensure that patients are protected while not stifling innovation by placing an undue burden on AI developers and healthcare providers.

3. Data Privacy: Protecting Patient Information in the Age of AI

AI systems in healthcare require vast amounts of data to function effectively. This data is often sensitive, containing personal health information that must be protected to maintain patient privacy. Laws such as the Health Insurance Portability and Accountability Act (HIPAA) in the United States and the General Data Protection Regulation (GDPR) in the European Union set strict rules for handling such data.

However, the sheer volume of data needed for AI to operate effectively can create privacy challenges. For instance, AI systems that analyze electronic health records (EHRs) to predict patient outcomes require access to a wide range of patient information. To balance the need for data with privacy concerns, healthcare providers and AI developers are employing techniques like data anonymization and differential privacy.

Anonymization removes personally identifiable information from datasets, making it difficult to trace data back to individual patients. Differential privacy adds carefully calibrated random “noise” to the data, or to the statistics computed from it, so that the result reveals very little about any single patient while still allowing AI systems to extract useful insights. By employing these techniques, healthcare providers can protect patient data while still enabling the innovation needed to improve patient care.
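To make these two techniques concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a production approach: the field names in `DIRECT_IDENTIFIERS`, the sample records, and the `epsilon` value are all hypothetical, and the noise step uses the Laplace mechanism, one common way to achieve differential privacy for a counting query. Note that dropping direct identifiers alone may not be enough in practice, since combinations of remaining fields can sometimes still identify a person.

```python
import random

# Hypothetical field names; a real dataset would define its own identifier list.
DIRECT_IDENTIFIERS = {"name", "address", "phone", "record_id"}


def anonymize(record):
    """Return a copy of the record with direct identifiers removed."""
    return {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) noise as the difference of two exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records, predicate, epsilon, rng=None):
    """Noisy count of records matching `predicate` (Laplace mechanism).

    Adding or removing one patient changes a count by at most 1,
    so the sensitivity is 1 and the noise scale is 1 / epsilon.
    """
    rng = rng or random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1 / epsilon, rng)


# Made-up example records, for illustration only.
patients = [
    {"name": "Patient A", "record_id": 1, "age": 67, "diabetic": True},
    {"name": "Patient B", "record_id": 2, "age": 54, "diabetic": False},
    {"name": "Patient C", "record_id": 3, "age": 71, "diabetic": True},
]

released = [anonymize(p) for p in patients]
noisy = private_count(released, lambda r: r["diabetic"], epsilon=0.5)

print(released[0])  # {'age': 67, 'diabetic': True}
print(noisy)        # the true count (2) plus random noise; varies per run
```

A smaller `epsilon` means more noise and stronger privacy, at the cost of less accurate statistics. Choosing that trade-off is a policy decision as much as a technical one.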

4. Ethical Considerations: Ensuring Fair and Just AI in Healthcare

The integration of AI into healthcare also raises numerous ethical concerns. One of the most pressing issues is ensuring that AI systems do not perpetuate biases, especially those that disadvantage minority communities. For example, if an AI system is trained primarily on data from a specific demographic group, it may not perform as well for patients from other groups. This can lead to disparities in healthcare outcomes and exacerbate existing inequalities.

Ethical frameworks guide the acceptable levels of risk and the pursuit of knowledge in AI applications. For instance, many ethical guidelines call for transparency in how AI systems make decisions, allowing healthcare providers and patients to understand the reasoning behind AI-driven recommendations. This transparency is crucial for maintaining trust in AI technologies, especially in high-stakes environments like healthcare.

Moreover, ethical frameworks often emphasize the importance of fairness in AI development and deployment. This includes ensuring that AI systems are trained on diverse datasets that represent a wide range of patient populations. By doing so, developers can help mitigate the risk of bias and ensure that AI technologies benefit all patients, not just a select few.
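One simple way to check for the kind of disparity described above is to compare a model's performance across patient groups. The sketch below is only an illustration: the group labels, outcomes, and predictions are made up, and accuracy is just one of several metrics a real fairness audit might use.

```python
from collections import defaultdict


def accuracy_by_group(groups, y_true, y_pred):
    """Return {group: accuracy}, computed separately for each group."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for g, t, p in zip(groups, y_true, y_pred):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


# Made-up data: group "A" is well represented in training, group "B" is not.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 0, 1, 0, 1, 0, 1, 0]   # actual outcomes
y_pred = [1, 0, 1, 0, 1, 1, 0, 0]   # model predictions

per_group = accuracy_by_group(groups, y_true, y_pred)
gap = max(per_group.values()) - min(per_group.values())

print(per_group)  # {'A': 1.0, 'B': 0.5}
print(gap)        # 0.5
```

A large gap between groups is a signal to revisit the training data or the model before deployment, not proof of a specific cause.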

5. Patient Safety Frameworks: Measuring and Ensuring AI’s Impact on Safety

Patient safety is at the heart of any healthcare intervention, and AI-based interventions are no different. Patient safety frameworks developed specifically for AI are therefore essential. These frameworks can help measure the impact of AI on patient safety across three modes of use: retrospective analysis, real-time monitoring, and future predictive use.

Retrospective analysis involves looking back at historical data to understand how AI could have impacted patient outcomes. For example, researchers might analyze past surgeries to determine whether an AI tool could have predicted complications more accurately than human clinicians. Real-time monitoring, on the other hand, involves using AI to continuously assess patient data, alerting healthcare providers to potential safety issues as they arise. Future predictive use refers to AI’s ability to forecast patient outcomes, allowing clinicians to take proactive measures to improve safety.

These patient safety frameworks are crucial for understanding how AI can enhance safety while identifying potential risks. They provide a structured approach to evaluating AI’s impact, helping healthcare providers make informed decisions about when and how to use these technologies.
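As a rough illustration of retrospective analysis, the sketch below compares how often clinicians and a hypothetical AI tool flagged past surgical cases that went on to have complications. All of the data is invented for the example, and sensitivity and specificity are assumed here as the comparison metrics. A real study would need far more cases and proper statistical testing.

```python
def sensitivity_specificity(actual, predicted):
    """Return (sensitivity, specificity) for boolean outcome and flag lists."""
    tp = sum(1 for a, p in zip(actual, predicted) if a and p)
    fn = sum(1 for a, p in zip(actual, predicted) if a and not p)
    tn = sum(1 for a, p in zip(actual, predicted) if not a and not p)
    fp = sum(1 for a, p in zip(actual, predicted) if not a and p)
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


# Made-up historical cases: did a complication actually occur?
complication = [True, True, True, False, False, False, False, True]
# Was the case flagged as high risk beforehand?
clinician_flag = [True, False, False, False, False, True, False, True]
ai_flag = [True, True, False, False, True, False, False, True]

print(sensitivity_specificity(complication, clinician_flag))  # (0.5, 0.75)
print(sensitivity_specificity(complication, ai_flag))         # (0.75, 0.75)
```

In this toy example the AI tool catches more of the true complications at the same false-alarm rate, which is the kind of evidence a retrospective study would look for before moving on to real-time use.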

6. Clinical Implementation: The Role of AI in Predicting Patient Outcomes and Assisting Decision-Making

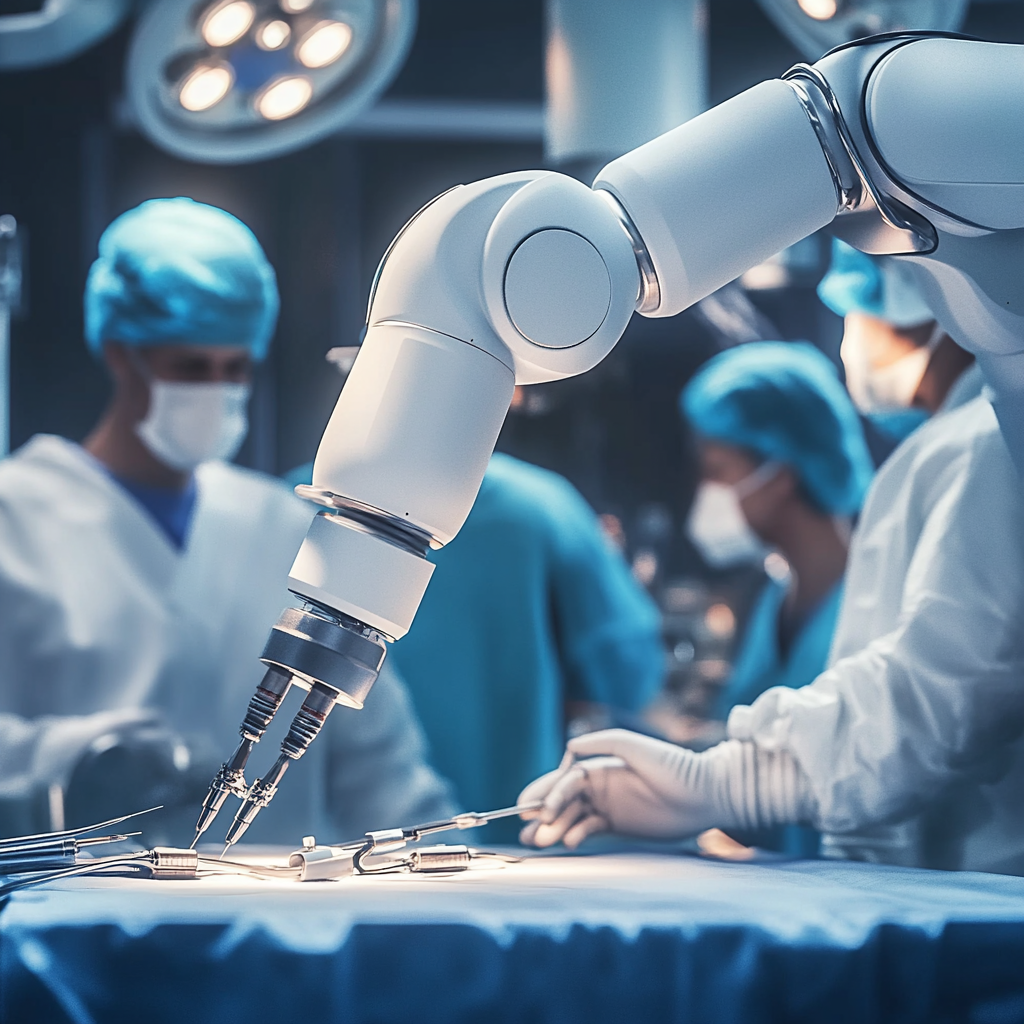

AI tools are increasingly being developed to assist in clinical decision-making, offering the potential to improve patient care significantly. For instance, AI algorithms can analyze large datasets to predict patient outcomes after surgery, helping doctors make better-informed decisions. These tools can also assist in diagnosing diseases, recommending treatments, and managing chronic conditions, providing clinicians with valuable insights that can improve patient outcomes.

However, the clinical implementation of AI is not without challenges. For one, AI tools must be rigorously tested and validated to ensure they are safe and effective. This involves not only laboratory testing but also real-world trials in diverse clinical settings. Moreover, clinicians must be trained to use these tools effectively, understanding both their capabilities and limitations.

The successful implementation of AI in clinical settings also requires collaboration between AI developers, healthcare providers, and regulatory bodies. By working together, these stakeholders can ensure that AI tools are developed and deployed in a way that maximizes patient benefits while minimizing risks.

7. Innovation vs. Safety: Striking the Right Balance

One of the most challenging aspects of integrating AI into healthcare is balancing innovation with safety. On the one hand, there is a need for rapid innovation to address pressing healthcare challenges, such as improving diagnostic accuracy, personalizing treatments, and managing public health crises. On the other hand, there is a need to ensure that AI systems are robust, reliable, and safe before they are widely implemented.

Achieving this balance involves several key strategies. First, AI systems must undergo rigorous testing and validation to ensure they meet the highest safety standards. This includes not only technical validation but also clinical validation, where AI tools are tested in real-world healthcare settings. Second, healthcare providers must be trained to use AI tools effectively, understanding both their strengths and limitations. Finally, regulatory bodies must continue to develop and refine frameworks that promote both innovation and safety.

By following these strategies, healthcare systems can harness the full potential of AI while ensuring patient safety remains a top priority.

Conclusion: A Dynamic and Evolving Field

The integration of AI into healthcare is a dynamic field that requires continuous evaluation and adaptation. As AI technologies continue to evolve, so too must the frameworks and strategies that govern their use. By focusing on regulatory frameworks, liability and accountability, data privacy, ethical considerations, patient safety frameworks, clinical implementation, and the balance between innovation and safety, healthcare systems can navigate the complexities of AI integration while ensuring the highest standards of patient care.

Ultimately, the goal is to create a healthcare system where AI enhances, rather than compromises, patient safety. Achieving this goal will require collaboration among AI developers, healthcare providers, policymakers, and patients. But with the right strategies and frameworks in place, the future of AI in healthcare looks promising, offering the potential to transform patient care and outcomes in ways we can only begin to imagine.
